Jan AI: Using Desktop LLMs with Langchain or llama-index

Jan lets you run LLMs locally on your Windows or macOS computer! An M1 chip with 16 GB of memory was enough for me to run Mistral. So how do you use Jan with libraries like Langchain or llama-index?

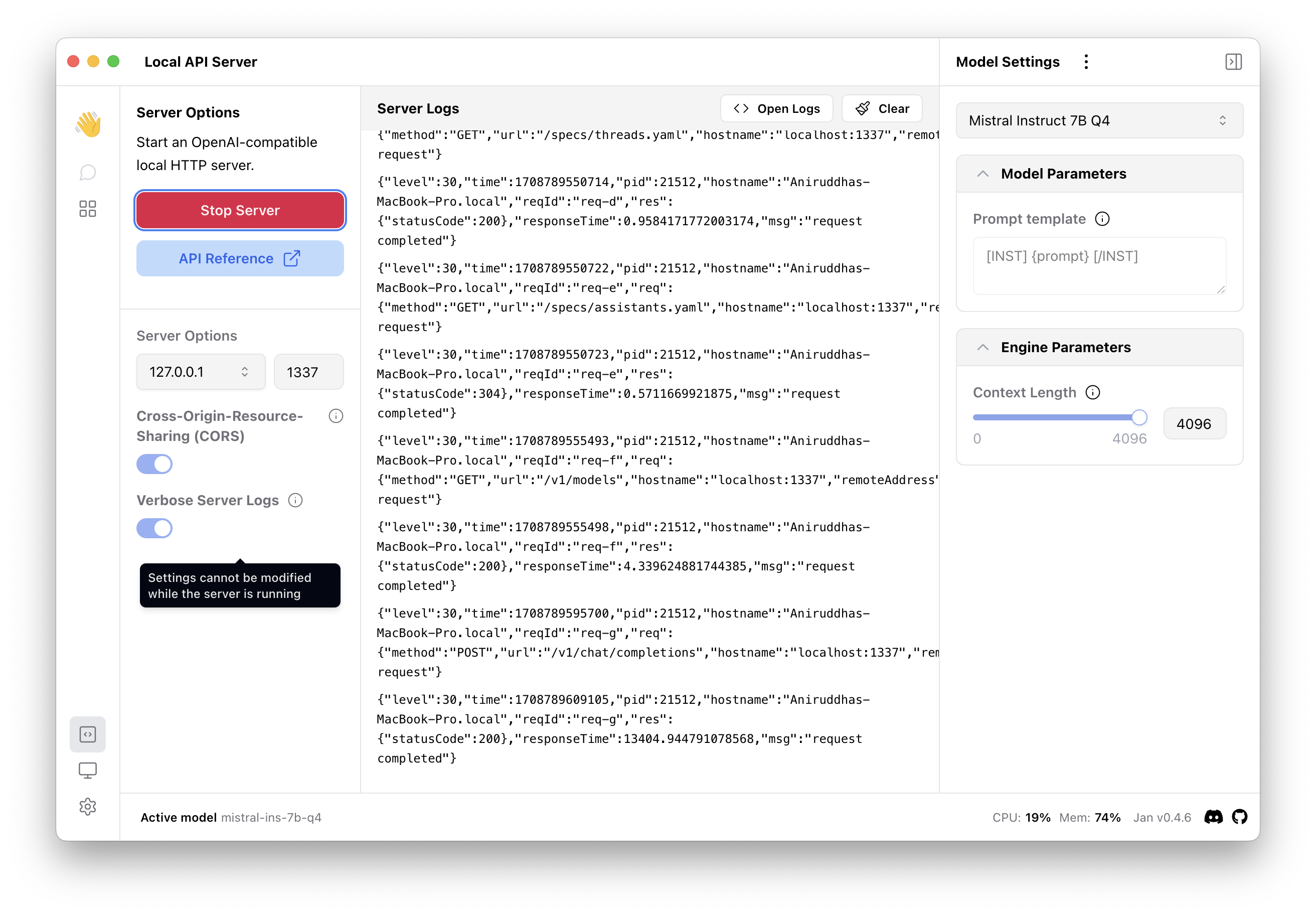

Local API Server

In Jan, click the [<>] icon to open the API Server pane, then click "Start Server". By default, the server listens on http://127.0.0.1:1337.
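To confirm the server actually came up, here's a minimal Python sketch that checks whether something is accepting connections on 127.0.0.1:1337, the address used in the OPENAI_API_BASE value in this post. The helper name `server_is_listening` is my own. It only tests that the port is open, not that Jan has a model loaded.

```python
import socket

# Address of Jan's local API server, as used in this post.
JAN_HOST = "127.0.0.1"
JAN_PORT = 1337


def server_is_listening(host: str = JAN_HOST, port: int = JAN_PORT, timeout: float = 1.0) -> bool:
    """Return True if something accepts TCP connections on host:port.

    This only checks that a socket is open; it does not confirm that the
    process behind it is Jan or that a model is loaded.
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Connection refused, timed out, or host unreachable.
        return False


if __name__ == "__main__":
    print("Jan API server reachable:", server_is_listening())
```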

OpenAI Environment Variables

Jan AI exposes an OpenAI-compatible /chat/completions endpoint. That means you can point your existing LLM applications at locally hosted models just by setting the OPENAI_API_BASE environment variable:
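Under the hood, an OpenAI-compatible endpoint just takes a JSON POST. The sketch below builds such a request using only Python's standard library. The helper names are hypothetical. Only the request shape (a `model` plus a `messages` list of role/content pairs, answered with a `choices` list) follows the OpenAI chat completions format.

```python
import json
import urllib.request

# Jan's local server address, as used in this post.
BASE_URL = "http://127.0.0.1:1337/v1"


def build_chat_request(prompt: str, model: str, base_url: str = BASE_URL) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send_chat_request(req: urllib.request.Request, timeout: float = 120.0) -> str:
    """Send the request and return the text of the first choice."""
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        payload = json.load(resp)
    return payload["choices"][0]["message"]["content"]
```

With the server running, `send_chat_request(build_chat_request("Hello!", model="<your-model-id>"))` should return the model's reply, where `<your-model-id>` is a placeholder for the id Jan lists for your downloaded model.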

```shell
export OPENAI_API_BASE=http://127.0.0.1:1337/v1
```

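This works as long as your library or application talks to the model through the Python or Node.js openai package. One caveat: version 1.x of the Python openai package reads OPENAI_BASE_URL instead of OPENAI_API_BASE, so set that variable too if you call it directly.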
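If you'd rather configure this from inside a Python script than in your shell, setting the variables in `os.environ` before importing or constructing any clients has the same effect. This sketch sets both names, since version 1.x of the Python openai package reads OPENAI_BASE_URL rather than OPENAI_API_BASE. The function name is hypothetical.

```python
import os

# Jan's local server address, as used in this post.
JAN_BASE_URL = "http://127.0.0.1:1337/v1"


def use_local_openai_endpoint(base_url: str = JAN_BASE_URL) -> None:
    """Point OpenAI-compatible clients created after this call at base_url.

    OPENAI_API_BASE is the variable this post exports (read by Langchain
    and llama-index); OPENAI_BASE_URL is the one read by version 1.x of
    the Python openai package.
    """
    os.environ["OPENAI_API_BASE"] = base_url
    os.environ["OPENAI_BASE_URL"] = base_url
```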

If you are using Langchain or llama-index, this is enough!
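For Langchain, here's a minimal sketch assuming a recent `langchain-openai` package (`pip install langchain-openai`) and that OPENAI_API_BASE and OPENAI_API_KEY are already exported. `ChatOpenAI` falls back to those variables when no `base_url` or `api_key` is passed. The wrapper function is hypothetical, and `model` should be the id Jan shows for your downloaded model.

```python
import os


def ask_local_model(question: str, model: str) -> str:
    """Send one question through Langchain's ChatOpenAI to Jan's local server.

    Assumes OPENAI_API_BASE and OPENAI_API_KEY are set in the environment.
    """
    if "OPENAI_API_BASE" not in os.environ:
        raise RuntimeError("Set OPENAI_API_BASE, e.g. http://127.0.0.1:1337/v1")

    # Imported lazily so this module loads even without langchain-openai installed.
    from langchain_openai import ChatOpenAI

    # No base_url/api_key passed: ChatOpenAI reads OPENAI_API_BASE and
    # OPENAI_API_KEY from the environment instead.
    llm = ChatOpenAI(model=model, temperature=0)
    return llm.invoke(question).content
```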

As for the API key, Jan doesn't check it. Client libraries still expect OPENAI_API_KEY to be set, though, so any placeholder value will do.
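Here's a small sketch of that in Python, equivalent to `export OPENAI_API_KEY=sk-jan-local` in your shell. The placeholder string and the helper name are arbitrary. The helper keeps any non-empty key you already have set and only fills in the placeholder when the variable is missing or empty.

```python
import os

# Any non-empty string works: Jan ignores the key, but OpenAI client
# libraries typically raise an error when no key is set at all.
PLACEHOLDER_KEY = "sk-jan-local"


def ensure_api_key(placeholder: str = PLACEHOLDER_KEY) -> str:
    """Set OPENAI_API_KEY to a placeholder if it is missing or empty."""
    if not os.environ.get("OPENAI_API_KEY"):
        os.environ["OPENAI_API_KEY"] = placeholder
    return os.environ["OPENAI_API_KEY"]
```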